Argo CD Architecture Redesigned

One of the common questions we are asked about Akuity’s managed Argo CD offering is: what makes it different from the open source version? On the surface, everything appears the same. The Argo CD UI, APIs, and functionality are all identical to the open source version, as they should be. It was important to us not to mess with the DNA of what makes Argo CD so popular, namely its developer experience. Our users should benefit from the continuous improvements in upstream OSS releases as soon as they are released, including security patches and even pre-release functionality. Layering our own interface in front of Argo CD would have been a mistake: it would only promote vendor lock-in, delay feature availability, and detract from the overall Argo CD experience.

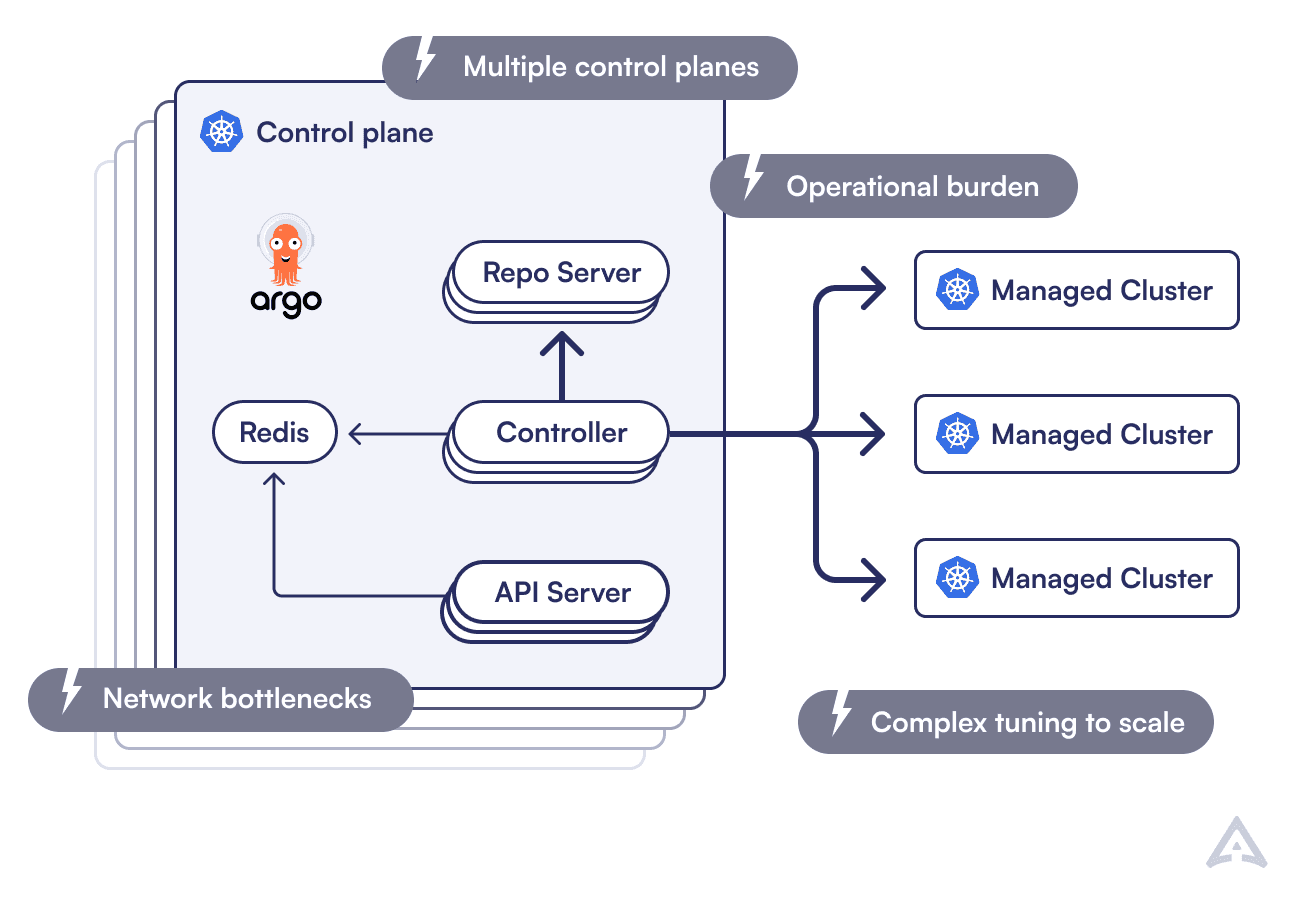

Under the covers, a lot of effort went into addressing the hidden operational burden of running Argo CD at scale. Having spent years developing, running, and scaling dozens of Argo CD instances against hundreds of clusters at Intuit, we knew precisely where things could be improved to help others who would inevitably run into the same issues.

When designing Argo CD as a SaaS offering, we saw an opportunity to improve the architecture in ways we never could before. We realized that an agent-based architecture would address many of the scaling, performance, and operational concerns of managing Argo CD. In this post, we’ll go over the benefits of the Akuity Platform, some of the challenges of the current OSS architecture, and how Akuity’s agent-based architecture addresses them.

Enterprise-Grade Features

On the Akuity Platform, we’ve introduced new functionality that up-levels Argo CD for the needs of the enterprise. All Argo CD application deployment activity is persisted long-term and indexed so that you can create custom reports on your organization’s deployment velocity and efficiency. Our audit trail records application events, API activity, and user activity, enabling you to easily discover and react to security liabilities. We’ve also enhanced Argo CD itself. Using Argo CD’s extension mechanism, we’ve integrated new functionality directly into its user interface, allowing developers to see sync history and audit trails, or even get assistance from an AI, without ever leaving Argo CD. All of this is managed through an easy-to-use interface that makes configuring and upgrading Argo CD simple.

Performance

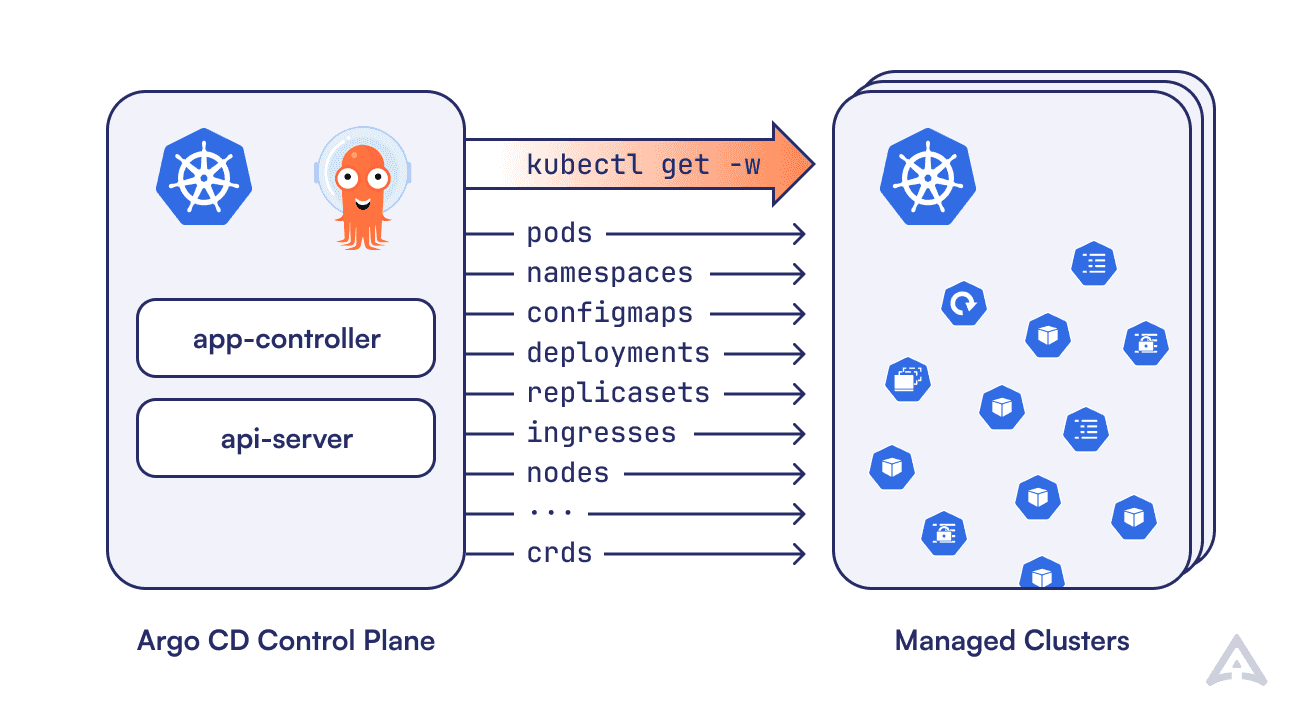

One of the most popular features of Argo CD is its real-time rendering of resources in the UI as changes happen in the Kubernetes cluster. This includes calculating the ownership relationships between resources (e.g. Deployments -> ReplicaSets -> Pods), assessing health changes, and detecting sync status changes. For this to work, a lot is happening under the hood. One of the most heavyweight processes is the application controller's set of long-lived Kubernetes watch streams, one for every resource group/kind in the cluster, through which it processes every resource change.

At any given time, a controller may have hundreds, sometimes thousands, of open connections to the Kubernetes clusters it manages (one per resource group/kind installed in each cluster). These remote Kubernetes API servers stream every resource change over the network back to the Argo CD control plane for processing, even when the update is not relevant to any application (which is the case the vast majority of the time).
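To make the scaling concrete, here is a minimal Python sketch that counts the watch streams a central controller would hold open, assuming exactly one stream per resource group/kind per managed cluster, as described above. The `ManagedCluster` type, the cluster names, and the figure of 120 group/kinds per cluster are hypothetical; real clusters vary with the CRDs they have installed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ManagedCluster:
    """Hypothetical model of a managed cluster and its installed API group/kinds."""
    name: str
    group_kinds: frozenset


def watch_streams(clusters):
    """Return one (cluster, group/kind) pair per long-lived watch stream.

    A centrally running controller opens a watch for every group/kind in every
    managed cluster, so the total grows with clusters x installed kinds.
    """
    return [(c.name, gk) for c in clusters for gk in sorted(c.group_kinds)]


if __name__ == "__main__":
    # Hypothetical fleet: 50 clusters, each with 120 group/kinds (built-ins plus CRDs).
    fleet = [
        ManagedCluster(f"cluster-{i}", frozenset(f"kind-{k}" for k in range(120)))
        for i in range(50)
    ]
    print(len(watch_streams(fleet)))  # 6000 open watch streams
```

Every one of those streams carries all changes for its group/kind across the network, whether or not any application cares about them.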

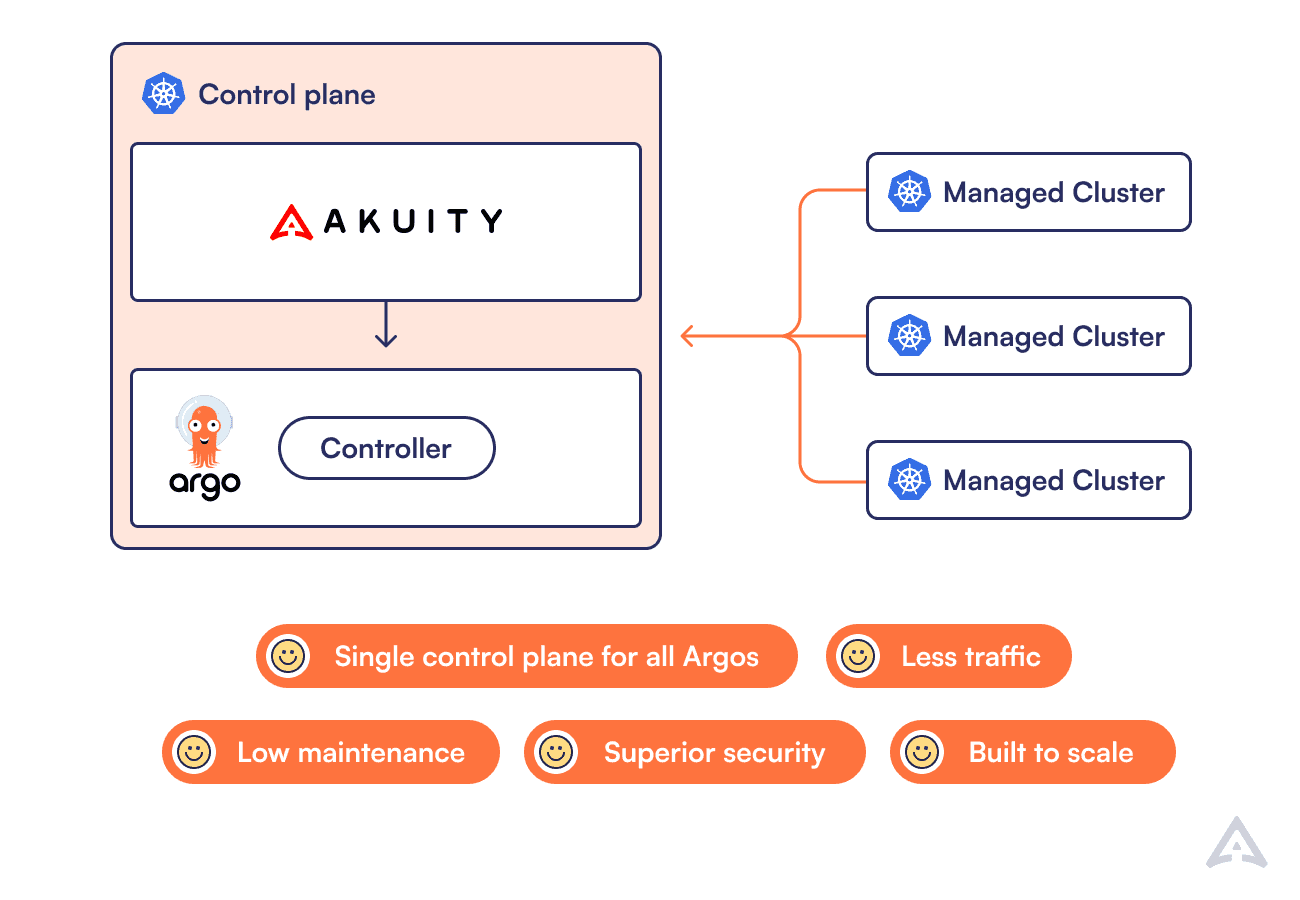

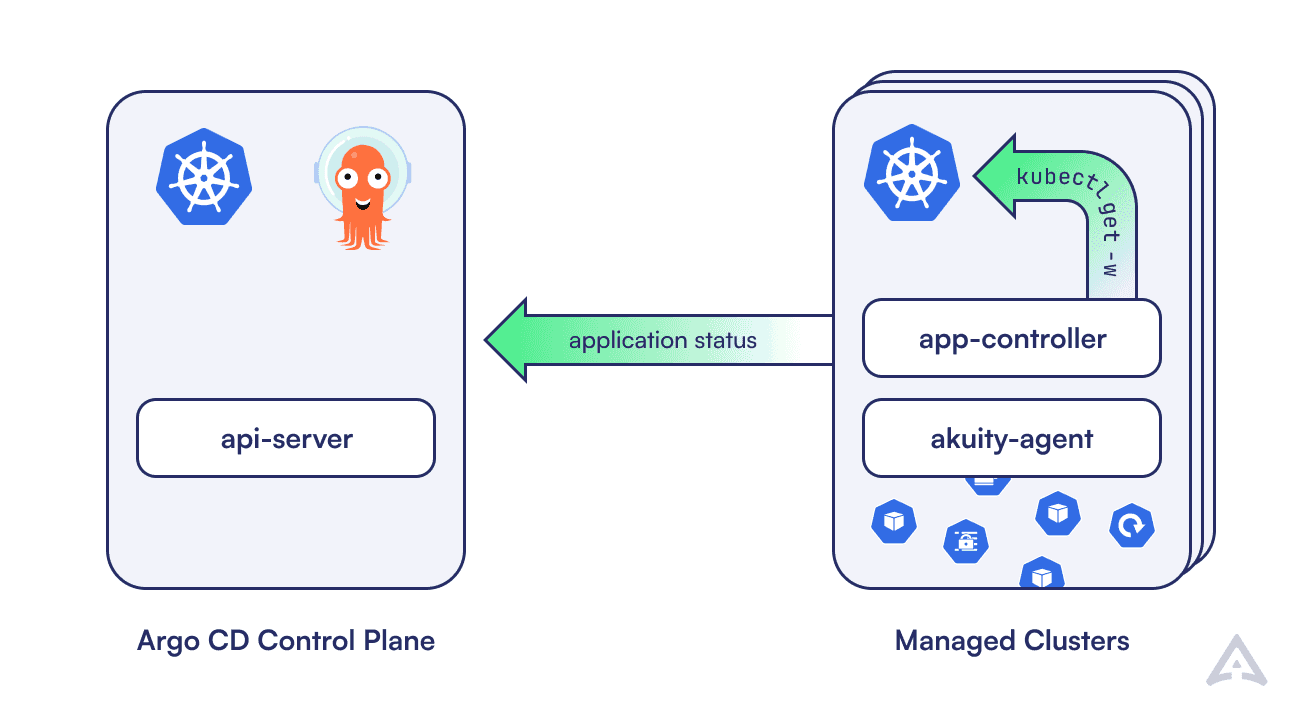

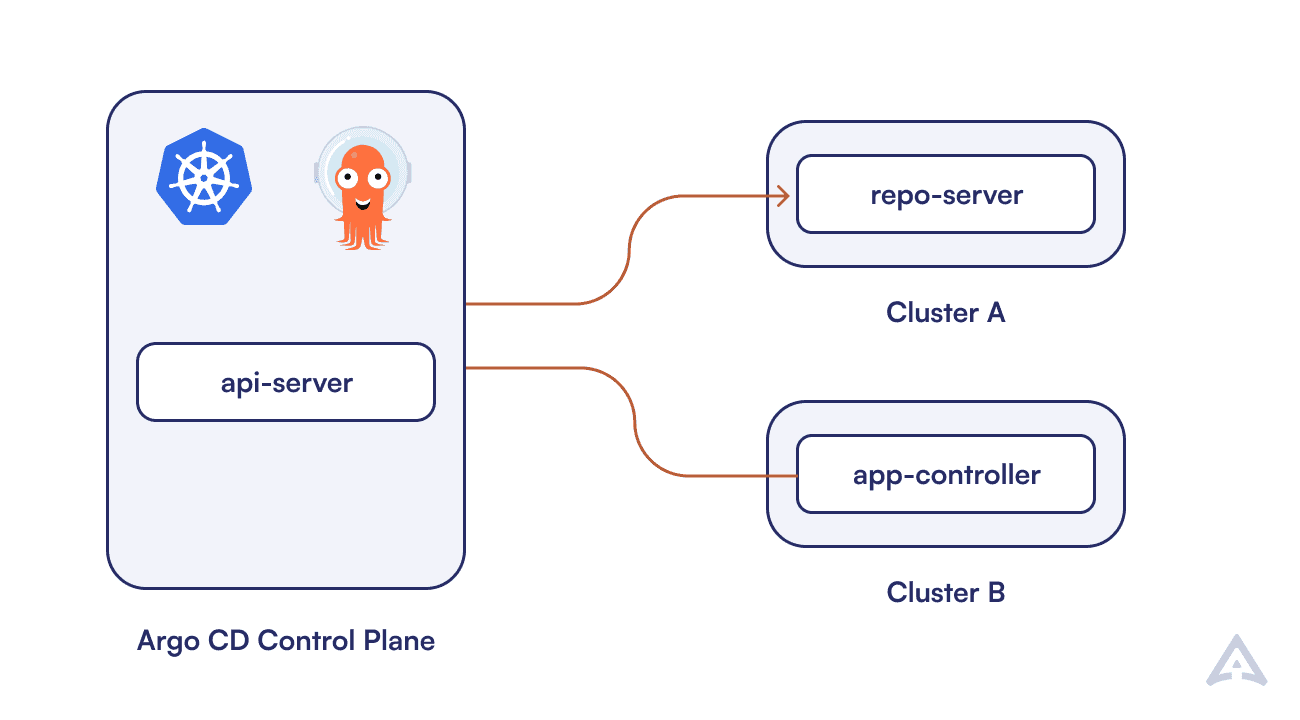

The biggest difference in Akuity’s design is where the controller runs. Instead of running the application-controller centrally and watching all resource updates over the network, the application controller runs in each cluster that needs to be managed.

With this approach, the application-controller watches the Kubernetes API using the in-cluster address (https://kubernetes.default.svc) and processes all resource updates locally. As each resource is processed, an update is sent back to the control plane only if there is a relevant change to an Argo CD application. By processing all resource updates in-cluster, you can expect about an 80% reduction in bandwidth usage.
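The filtering step can be sketched as follows. This is a minimal Python illustration, not Akuity's implementation: it assumes a hypothetical `LocalAgent` that knows which resources belong to which application, drops events for untracked resources, and forwards an update only when a tracked resource's health actually changes. Real Argo CD also walks ownership relationships (a Pod event can matter because its ReplicaSet belongs to an application) and compares sync status, both of which this sketch leaves out.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ResourceEvent:
    """A simplified watch event as seen by an in-cluster controller."""
    group_kind: str
    namespace: str
    name: str
    health: str  # e.g. "Healthy", "Progressing", "Degraded"


@dataclass
class LocalAgent:
    """Hypothetical in-cluster agent that decides what to send upstream."""
    # (group_kind, namespace, name) -> name of the Argo CD application that owns it
    tracked: dict
    last_health: dict = field(default_factory=dict)

    def process(self, event):
        """Return an update for the control plane, or None if nothing relevant changed."""
        key = (event.group_kind, event.namespace, event.name)
        app = self.tracked.get(key)
        if app is None:
            return None  # not part of any application: handled locally, never sent
        if self.last_health.get(key) == event.health:
            return None  # tracked, but no application-visible change
        self.last_health[key] = event.health
        return {"application": app, "resource": key, "health": event.health}


if __name__ == "__main__":
    agent = LocalAgent(tracked={("apps/Deployment", "default", "web"): "web-app"})
    events = [
        ResourceEvent("apps/Deployment", "default", "web", "Progressing"),
        ResourceEvent("apps/Deployment", "default", "web", "Progressing"),
        ResourceEvent("ConfigMap", "kube-system", "coredns", "Healthy"),
        ResourceEvent("apps/Deployment", "default", "web", "Healthy"),
    ]
    upstream = [u for u in map(agent.process, events) if u is not None]
    # prints "4 events processed locally, 2 sent upstream"
    print(f"{len(events)} events processed locally, {len(upstream)} sent upstream")
```

The design point is that the expensive part (receiving and evaluating every event) happens next to the API server, and only the much smaller stream of application-level changes crosses the network.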

Security

Another challenge of the open source Argo CD architecture is its access model for the clusters it manages. When Argo CD manages a remote cluster, two requirements must be met:

1. It must be able to access the Kubernetes API server directly. Typically this means the Kubernetes API endpoint must be made public or reachable via VPC peering, since the Argo CD control plane cluster is typically in a different VPC than the clusters it manages.

2. It must store long-lived credentials for that API server in order to manage the cluster. This is done by creating an argocd-manager ServiceAccount in the managed cluster along with a long-lived bearer token for that ServiceAccount, which is then stored in the Argo CD control plane.
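For reference, this is roughly what that stored credential looks like in open source Argo CD's declarative setup: a Secret in the Argo CD namespace, labeled `argocd.argoproj.io/secret-type: cluster`, whose `config` field carries the bearer token. The Python sketch below only builds the manifest as a dictionary (which can be serialized to JSON and passed to `kubectl apply -f`); the cluster name, server URL, Secret naming scheme, and placeholder token are hypothetical.

```python
import json


def cluster_secret(name, server, bearer_token, ca_data_b64, namespace="argocd"):
    """Build an Argo CD declarative cluster Secret (open source model).

    The bearer token is the long-lived argocd-manager ServiceAccount token;
    it sits in the control plane next to the API server URL.
    """
    config = {
        "bearerToken": bearer_token,
        "tlsClientConfig": {"insecure": False, "caData": ca_data_b64},
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {
            "name": f"{name}-cluster",  # hypothetical naming scheme
            "namespace": namespace,
            "labels": {"argocd.argoproj.io/secret-type": "cluster"},
        },
        "type": "Opaque",
        "stringData": {"name": name, "server": server, "config": json.dumps(config)},
    }


if __name__ == "__main__":
    manifest = cluster_secret(
        "prod-east", "https://prod-east.example.com:6443", "<token>", "<base64 CA>"
    )
    print(json.dumps(manifest, indent=2))
```

Anyone who can read Secrets like this one in the control plane holds working credentials for every managed cluster, which is what makes the control plane such an attractive target.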

Unfortunately, this makes the Argo CD control plane an extremely high-value target for attackers. Because of these security implications, the requirements often dissuade operators from running Argo CD the way it was intended: as a central management plane for multiple clusters. That’s why many users end up running one Argo CD instance per cluster.

Akuity’s Argo CD offers a different model. Rather than having the Argo CD controller access the remote Kubernetes API servers of managed clusters directly, the application-controller runs inside each cluster it manages. This allows the controller to authenticate to the Kubernetes API server in-cluster using normal ServiceAccount authentication, with no long-lived credentials. Kubernetes credentials for managed clusters are never stored in the Akuity control plane.
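In-cluster authentication relies on the credentials Kubernetes mounts into every Pod that uses a ServiceAccount. The sketch below mirrors, in simplified form, what Kubernetes client libraries do when loading in-cluster configuration: it reads the API server address from the `KUBERNETES_SERVICE_HOST` and `KUBERNETES_SERVICE_PORT` environment variables and the token from the standard mount path. It is an illustrative assumption, not Akuity's agent code. The token is re-read on every call because projected ServiceAccount tokens are time-bound and rotated by the kubelet.

```python
import os
from dataclasses import dataclass
from pathlib import Path

# Standard path where Kubernetes mounts a Pod's ServiceAccount credentials.
SERVICE_ACCOUNT_DIR = Path("/var/run/secrets/kubernetes.io/serviceaccount")


@dataclass(frozen=True)
class InClusterConfig:
    server: str
    token: str
    ca_cert_path: Path


def load_in_cluster_config(sa_dir: Path = SERVICE_ACCOUNT_DIR) -> InClusterConfig:
    """Build API server connection settings from inside a Pod.

    No credential is stored outside the cluster: the token comes from the
    Pod's own mounted ServiceAccount volume.
    """
    host = os.environ["KUBERNETES_SERVICE_HOST"]
    port = os.environ["KUBERNETES_SERVICE_PORT"]
    if ":" in host:  # an IPv6 service address needs brackets in a URL
        host = f"[{host}]"
    # Re-read on every call: the kubelet rotates projected tokens in place.
    token = (sa_dir / "token").read_text().strip()
    return InClusterConfig(
        server=f"https://{host}:{port}",
        token=token,
        ca_cert_path=sa_dir / "ca.crt",
    )
```

A controller would then send `Authorization: Bearer <token>` on each request and verify the API server's certificate against `ca.crt`.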

Another benefit of the controller accessing the Kubernetes API server in-cluster is that the API server no longer needs to be exposed for the cluster to be managed by Argo CD. A recommended security best practice today is to enable private Kubernetes API server endpoint access and disable public access. If you previously relied on VPC peering, you no longer need to manage peering connections or CIDR blocks, which saves considerable networking effort. This dramatically improves the security posture of your Kubernetes clusters, since the API server is only accessible within your VPC or through bastion hosts.

Scalability

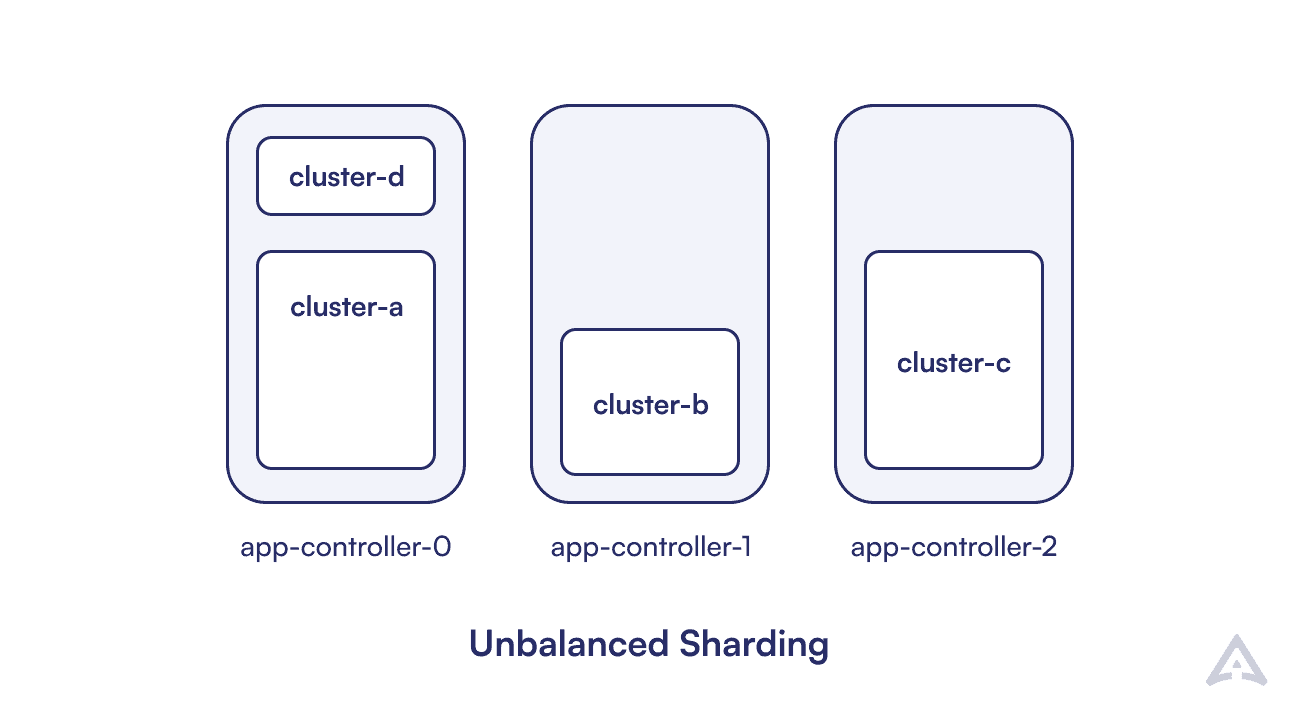

Another challenge with Argo CD is scaling it as more clusters are managed. The Argo CD application-controller is a CPU- and memory-intensive component because of the sheer volume of resource events it must process to keep application state up to date in real time. On a cluster with a very high number of resources, or a lot of resource activity, the controller can consume an exorbitant amount of CPU and memory. If a single Argo CD instance manages many clusters, this load is multiplied by the number of clusters.

Eventually, a single controller is not enough, and you must begin sharding your controllers. Sharding works, but the mechanism is quite primitive. When sharding is enabled, clusters are arbitrarily assigned to a controller on the naive assumption that clusters are more or less homogeneous in size and activity. In practice, clusters are not all the same size, so you end up with load spread unevenly across controller shards.
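The imbalance is easy to reproduce. The Python sketch below assumes, for illustration, that each cluster is placed on a shard by hashing a cluster identifier with 32-bit FNV-1a and taking the result modulo the number of controller replicas, which is similar in spirit to Argo CD's default (“legacy”) distribution; consult the Argo CD sharding documentation for the exact behavior of your version. The cluster names and their sizes (in resources) are hypothetical.

```python
def fnv1a_32(data: bytes) -> int:
    """32-bit FNV-1a hash."""
    h = 0x811C9DC5  # FNV offset basis
    for byte in data:
        h ^= byte
        h = (h * 0x01000193) & 0xFFFFFFFF  # multiply by FNV prime, keep 32 bits
    return h


def shard_for(cluster_id: str, replicas: int) -> int:
    """Size-blind placement: the shard depends only on the cluster's identifier."""
    return fnv1a_32(cluster_id.encode()) % replicas


def shard_loads(cluster_sizes: dict, replicas: int) -> list:
    """Sum the resource counts that land on each controller shard."""
    loads = [0] * replicas
    for cluster_id, size in cluster_sizes.items():
        loads[shard_for(cluster_id, replicas)] += size
    return loads


if __name__ == "__main__":
    # Hypothetical fleet: two large production clusters and several small ones.
    sizes = {"prod-us": 40_000, "prod-eu": 35_000, "staging": 3_000,
             "dev-1": 500, "dev-2": 400, "sandbox": 100}
    loads = shard_loads(sizes, replicas=3)
    print(loads, "max/min ratio:", max(loads) / max(min(loads), 1))
```

Because placement ignores size, whichever shard receives `prod-us` carries at least 40,000 resources however small the others are, and changing the replica count only reshuffles clusters without regard to load.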

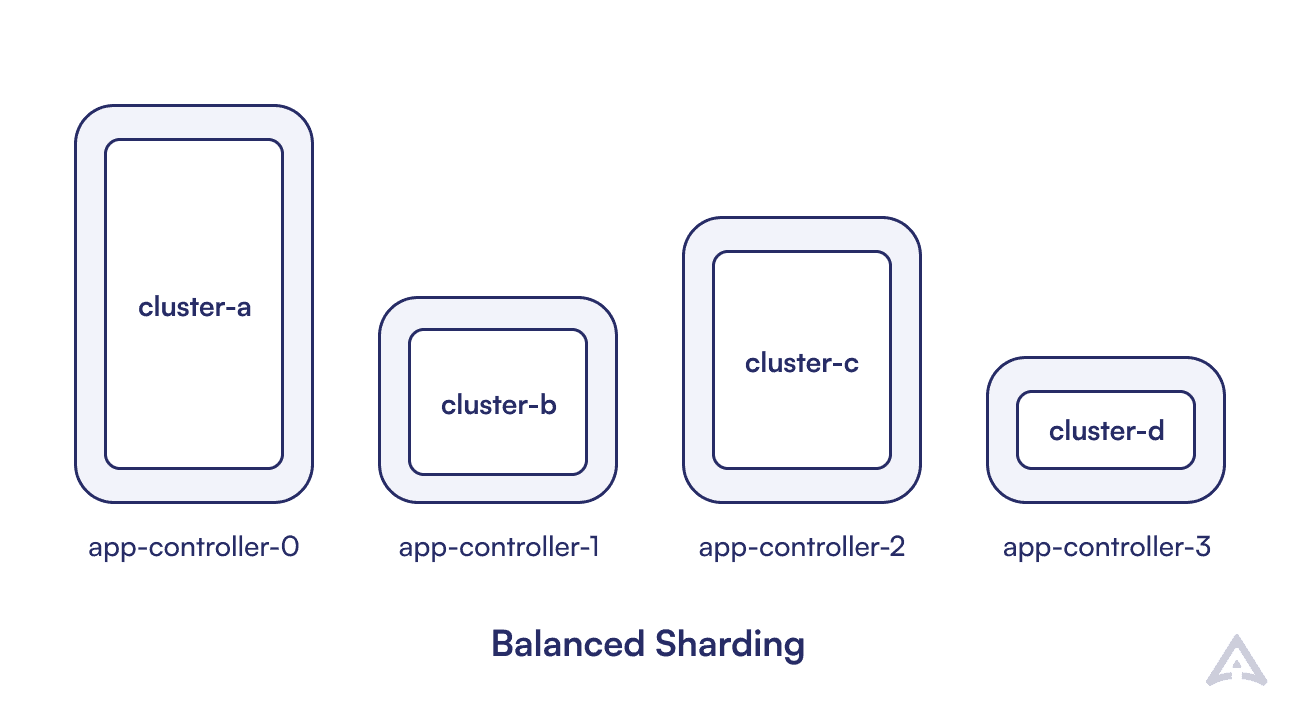

Although there are ways to manually assign clusters to shards for better CPU and memory utilization, this is currently a tedious and imperfect process that requires continuous monitoring and tuning. With Akuity’s design, every cluster runs its own controller. This achieves perfect sharding: each cluster effectively gets its own dedicated controller shard, processing its own events with no noise or interference from other clusters. In addition, each controller’s resources can be right-sized to match exactly the CPU and memory its cluster requires.
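To see why manual assignment needs ongoing tuning, consider one way an operator might compute it: a largest-first greedy heuristic (a standard load-balancing technique, not an Argo CD feature) that places each cluster on the currently least-loaded shard. The Python sketch below assumes you already have a per-cluster load figure, such as recent controller CPU usage, which is exactly the measurement that must be monitored and recomputed as clusters change. The cluster names and loads are hypothetical.

```python
import heapq


def greedy_assign(cluster_load: dict, replicas: int) -> dict:
    """Largest-first greedy: put each cluster on the least-loaded shard so far."""
    heap = [(0.0, shard) for shard in range(replicas)]  # (total load, shard index)
    heapq.heapify(heap)
    assignment = {shard: [] for shard in range(replicas)}
    # Place the heaviest clusters first; small ones then fill in the gaps.
    for name, load in sorted(cluster_load.items(), key=lambda kv: kv[1], reverse=True):
        total, shard = heapq.heappop(heap)
        assignment[shard].append(name)
        heapq.heappush(heap, (total + load, shard))
    return assignment


if __name__ == "__main__":
    # Hypothetical per-cluster load (for example, average controller CPU cores).
    load = {"prod-us": 4.0, "prod-eu": 3.5, "staging": 0.6, "dev-1": 0.2}
    print(greedy_assign(load, replicas=2))
```

The result is only as good as the load figures it was given, so it must be recomputed and re-applied whenever a cluster grows. With one controller per cluster there is nothing to assign: each cluster is its own shard, and each controller's load is simply its own cluster's load.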

Find out more about unleashing the ultimate Argo CD scalability using Akuity Platform on our blog.

Flexibility

Another benefit of the Akuity Platform’s design is the flexibility it provides in deciding where Argo CD components run. With open source Argo CD, there is no choice: all components must run in the central control plane. However, many of the users we work with have unique restrictions on where Git and other secured services can be accessed from. With Akuity’s delegation feature, Git access can be securely “delegated” to agents running in another cluster, giving you the freedom to secure your services as you see fit.

Find out more about the ultimate Argo CD flexibility with Akuity Platform on our blog.

Summary

When we first built Argo CD, one of the critical design choices was to keep all Argo CD components running in a centralized control plane. This provided a simple architecture, made it easy to operate, and enabled us to iterate faster on the project. The decision proved to work well in the initial years and was arguably the appropriate choice at the time.

Since then, Argo CD has been tasked with processing more events and clusters than we ever expected, and with running in more restrictive environments than we anticipated. Cracks have started to form, so at Akuity we saw the opportunity to re-architect Argo CD the way we would if we were starting over.

To try the Akuity Platform, start a free trial and have a fully managed Argo CD instance running in minutes.

You can also schedule a technical demo with our team or go through the “Getting started” manual on the Akuity Documentation website.
