Using Akuity Platform with Terraform

Once you have created a GKE, EKS, AKS, or any other Kubernetes cluster, you probably want to manage it with your Argo CD instance. But how do you register it there? The classic approach of adding a new cluster with a shell command (OSS Argo CD or AKP) is neither very declarative nor very scalable. Let’s look at another approach.

At this point you are most likely already using Terraform and declaring your cloud infrastructure in the HashiCorp Configuration Language (HCL).

Let me introduce the akuity/akp Terraform provider.

Provider configuration

First, declare the provider in your configuration; terraform init will then download the latest matching version:

terraform {
  required_providers {
    akp = {
      source  = "akuity/akp"
      version = "~> 0.4"
    }
  }
}

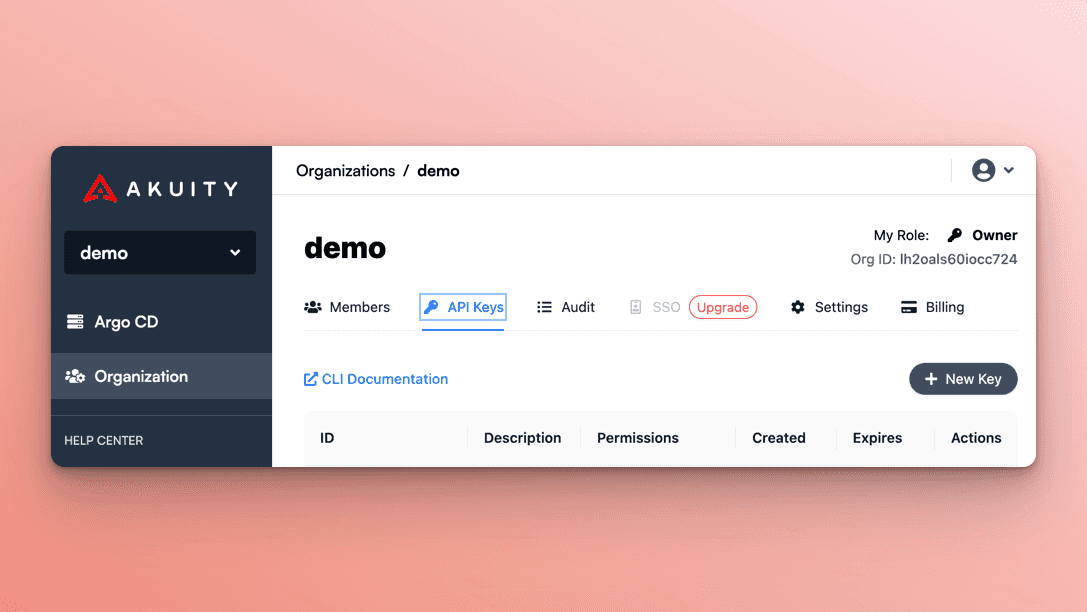

You will need an organization name and an API key to access the Akuity Platform:

- Find your organization name on the organizations page.
- Create an API key on the “API Keys” tab of your organization. Don’t forget to choose the “Admin” role for the key.

Configure the provider with your organization name and the API key ID and secret:

provider "akp" {
  org_name       = "demo"
  api_key_id     = "key-id"
  api_key_secret = "key-secret"
}

Alternatively, you can set the AKUITY_API_KEY_ID and AKUITY_API_KEY_SECRET environment variables instead of putting the key in the configuration.
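Another way to keep the key out of your configuration files is to pass it in through sensitive input variables. This is a minimal sketch that would take the place of the provider block shown above; the variable names are hypothetical, and Terraform can fill them from the TF_VAR_akp_api_key_id and TF_VAR_akp_api_key_secret environment variables or from a .tfvars file.

```hcl
# Hypothetical variable names; set them via TF_VAR_akp_api_key_id and
# TF_VAR_akp_api_key_secret, or from a .tfvars file kept out of version control.
variable "akp_api_key_id" {
  type      = string
  sensitive = true # hide the value in plan/apply output
}

variable "akp_api_key_secret" {
  type      = string
  sensitive = true
}

provider "akp" {
  org_name       = "demo"
  api_key_id     = var.akp_api_key_id
  api_key_secret = var.akp_api_key_secret
}
```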

Create resources

Now that the provider is configured, you’re ready to create a new Argo CD instance if you don’t have one yet:

resource "akp_instance" "example" {
  name                           = "tf-managed-demo"
  description                    = "Terraform managed Argo CD"
  version                        = "v2.6.2"
  declarative_management_enabled = true
}

Creating new Argo CD instances with the Terraform provider is still in beta: the Akuity API is in active development and its schema can change at any time, breaking older provider versions. For production, I recommend using the akp_instance data source instead, referencing a manually created and configured Argo CD instance.
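Because a changed API can break older provider releases, you might also pin the provider more tightly. In Terraform’s pessimistic constraint syntax, "~> 0.4" accepts any 0.x release from 0.4 onward (0.5, 0.6, and so on), whereas "~> 0.4.0" accepts only 0.4.x patch releases. A sketch, assuming 0.4.x is the line you have tested against:

```hcl
terraform {
  required_providers {
    akp = {
      source = "akuity/akp"
      # Allows only 0.4.x patch releases (>= 0.4.0, < 0.5.0);
      # move to a newer minor version deliberately, after testing.
      version = "~> 0.4.0"
    }
  }
}
```

The .terraform.lock.hcl file written by terraform init also records the exact provider version selected, so committing it keeps runs reproducible across machines.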

Next, add your cluster to this instance. Here is an example for an AKS cluster, assuming you have declared an azurerm_kubernetes_cluster.example resource:

resource "akp_cluster" "test" {
  name        = "azure-eastus"
  size        = "small"
  namespace   = "akuity"
  instance_id = akp_instance.example.id

  kube_config = {
    host                   = azurerm_kubernetes_cluster.example.kube_config[0].host
    username               = azurerm_kubernetes_cluster.example.kube_config[0].username
    password               = azurerm_kubernetes_cluster.example.kube_config[0].password
    client_certificate     = base64decode(azurerm_kubernetes_cluster.example.kube_config[0].client_certificate)
    client_key             = base64decode(azurerm_kubernetes_cluster.example.kube_config[0].client_key)
    cluster_ca_certificate = base64decode(azurerm_kubernetes_cluster.example.kube_config[0].cluster_ca_certificate)
  }
}

That's it. The Akuity agent will be installed automatically, and your new cluster is ready.

Of course, this works with any cloud provider, not just Azure.
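As an illustration, here is a sketch of the same registration for an EKS cluster, assuming you have declared an aws_eks_cluster.example resource with the AWS provider. Instead of client certificates it authenticates with a token from the AWS provider’s aws_eks_cluster_auth data source; the cluster name aws-us-east-1 is only a placeholder.

```hcl
# Authentication token for the EKS cluster, issued by the AWS provider
data "aws_eks_cluster_auth" "example" {
  name = aws_eks_cluster.example.name
}

resource "akp_cluster" "eks" {
  name        = "aws-us-east-1" # placeholder name
  size        = "small"
  namespace   = "akuity"
  instance_id = akp_instance.example.id

  kube_config = {
    host                   = aws_eks_cluster.example.endpoint
    token                  = data.aws_eks_cluster_auth.example.token
    # EKS exposes the CA certificate base64-encoded, hence the decode
    cluster_ca_certificate = base64decode(aws_eks_cluster.example.certificate_authority[0].data)
  }
}
```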

Read existing resources

What if you already have an Argo CD instance running and configured outside of Terraform?

No problem: just use the akp_instance data source instead of the resource.

data "akp_instance" "existing" {
  name = "tf-managed-demo"
}
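To confirm which instance the data source resolved to, one small option is to expose its ID as an output. The output name below is arbitrary:

```hcl
# ID of the existing instance looked up by name in the data source
output "argocd_instance_id" {
  value = data.akp_instance.existing.id
}
```

After terraform apply, running terraform output argocd_instance_id prints the ID.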

Adding a new cluster to an existing Argo CD instance is no different; just set the instance_id field accordingly:

resource "akp_cluster" "test" {
  …
  instance_id = data.akp_instance.existing.id
  …
}

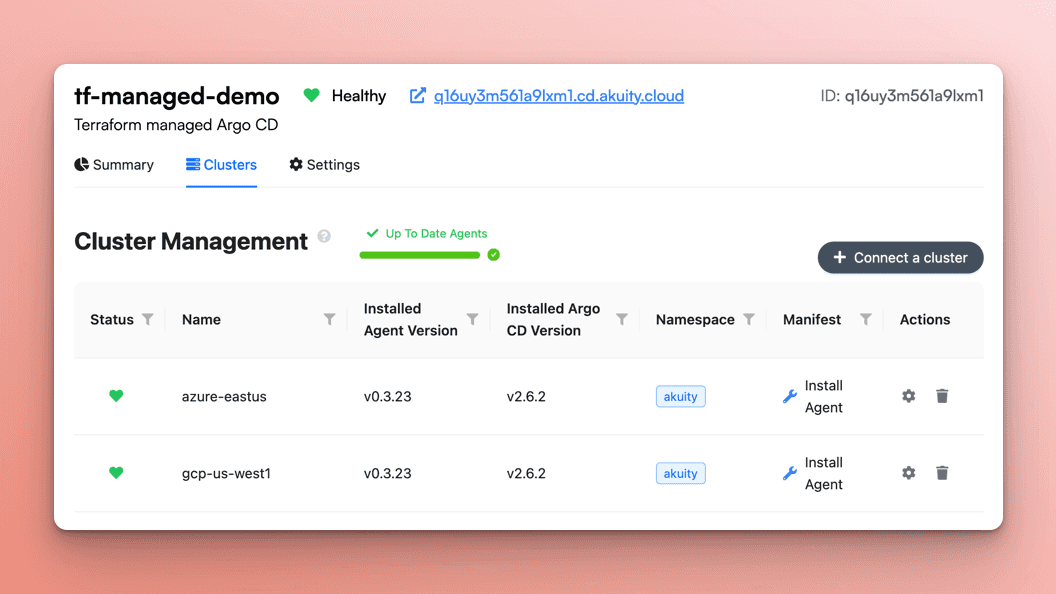

Import Argo CD instances

If you already have an Argo CD instance and want to manage it with Terraform, use the terraform import command:

- Note the name and ID of your Argo CD instance. For example, let’s say the name is tf-managed-demo and the ID is q16uy3m561a9lxm1.
- Declare an akp_instance resource and make sure its name field matches the instance name, e.g.:

  resource "akp_instance" "example" {
    name    = "tf-managed-demo"
    version = "v2.6.2"
  }

- Run terraform import with the resource address and the instance ID, e.g.:

  terraform import akp_instance.example q16uy3m561a9lxm1
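On Terraform 1.5 or newer, the same import can also be written declaratively as an import block, which terraform plan previews before terraform apply performs it. A sketch reusing the example name and ID from the steps above, assuming the provider accepts the same instance ID here as it does with terraform import; the resource block is the one from the steps, repeated for completeness:

```hcl
# Requires Terraform >= 1.5; the ID is the example instance ID from the steps above
import {
  to = akp_instance.example
  id = "q16uy3m561a9lxm1"
}

resource "akp_instance" "example" {
  name    = "tf-managed-demo"
  version = "v2.6.2"
}
```

Once the import has been applied, the import block can be removed.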

Increase the number of clusters

With Terraform’s for_each meta-argument you can easily add any number of clusters, and Terraform will create them in parallel (by default, up to 10 operations at a time)!

Here is an example of adding GKE clusters, assuming each one is created with the gke module and the cluster names and parameters are stored in the local value clusters:

locals {
  clusters = {
    gcp-dev-01 = {
      env = "dev"
    }
    gcp-stage-01 = {
      env = "stage"
    }
    …
  }
}

module "gke" {
  for_each = local.clusters
  source   = "terraform-google-modules/kubernetes-engine/google//modules/private-cluster"
  version  = "24.1.0"

  name = each.key
  cluster_resource_labels = {
    env = each.value.env
  }
  …
}

# Access token for the credentials Terraform runs with (google provider)
data "google_client_config" "default" {}

resource "akp_cluster" "clusters" {
  for_each    = module.gke
  name        = each.value.name
  size        = "small"
  instance_id = akp_instance.example.id
  # Same labels the module received, read from the local map by cluster key
  labels      = { env = local.clusters[each.key].env }

  kube_config = {
    host                   = "https://${each.value.endpoint}"
    token                  = data.google_client_config.default.access_token
    cluster_ca_certificate = base64decode(each.value.ca_certificate)
  }
}
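Because akp_cluster.clusters is created with for_each, it is a map keyed by cluster name. A short sketch for exposing that map, for example to check the result or feed other configurations (the output name is arbitrary):

```hcl
# Map of cluster name => Akuity cluster ID, one entry per for_each key
output "akp_cluster_ids" {
  value = { for name, cluster in akp_cluster.clusters : name => cluster.id }
}
```

Adding another cluster is then a matter of adding an entry to local.clusters and running terraform apply.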

Some arguments are omitted from this example for readability; setting up networking, node pools, and the rest is left to you.

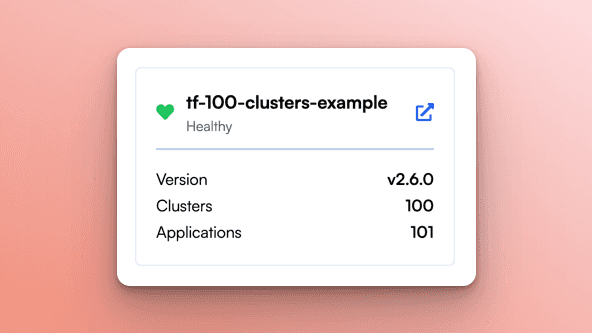

Here is a different example, with namespace-scoped agents instead of cluster-scoped. Adding 100 clusters took me 7 minutes, and half of the time was just waiting for the host cluster to scale up:
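The namespace-scoped setup is not shown in code here. As a rough sketch, it might look like the for_each example above with one extra setting on akp_cluster, used in place of akp_cluster.clusters. The namespace_scoped argument is an assumption about the provider schema and may be named or nested differently in your provider version, so check the akp_cluster documentation before relying on it.

```hcl
resource "akp_cluster" "namespaced" {
  for_each    = module.gke
  name        = each.value.name
  size        = "small"
  namespace   = "akuity"
  instance_id = akp_instance.example.id

  # ASSUMPTION: argument name for a namespace-scoped agent; verify it
  # against the akp_cluster docs for the provider version you use.
  namespace_scoped = true

  kube_config = {
    host                   = "https://${each.value.endpoint}"
    token                  = data.google_client_config.default.access_token
    cluster_ca_certificate = base64decode(each.value.ca_certificate)
  }
}
```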

Try it Out

To try this out, log in to your user account or start a free trial to get a fully managed Argo CD instance in minutes.

If you want advice on where to start with Akuity or Argo CD, please reach out to our Developer Advocate (Nicholas Morey) on the CNCF Slack. You can find him in the #argo-* channels.

You can also schedule a technical demo with our team or go through the “Getting started” manual on the Akuity Documentation website.
